Following on my post on setting up a platform to get started with data science tools I since have set up a Jupyter based platform for programming Python on Spark.

On top of using Python libraries (like pandas, NumPy, Scikit-Learn, etc) that makes data analysis easier, in this platform I can also use Spark to code applications that run on distributed clusters

This setup has the following benefits

- It is web based, I can work on my projects from anywhere as long as I have a web browser with an internet connection

- It is set up using light weight EC2 instance types (t2.micro) so it is potentially free to run

- The code is hosted on Gitlab and all changes done to the scripts are version controlled using git

- It uses Docker containers, which make the whole process so much easier and quicker than traditional method of installing individual packages on the box

The setup

For this setup I launched a t2.micro (simply because I could run it on a free tier) instance of EC2 on Amazon AWS

I found the jupyter/all-spark-notebook Docker image the simplest to get up and running with.

Once I have launched the instance (running Amazon Linux AMI) I connected to the instance and installed Docker, added the current logged in user (for this AMI is it ec2-user) and started the Docker service

sudo yum install docker

sudo usermod -aG docker $USER

sudo service docker start

The code

I hosted my code on Gitlab, so I created a development directory and cloned my “data-science” repository where I have my scripts

mkdir dev

cd dev/

git clone [email protected]:haddad/data-science.git

Now I had my code in place, the next step was to start the Docker container

The IDE

Starting the Docker container is simply running this

docker run -d --name spark-jupyter \

-v /home/ec2-user/dev/data-science:/home/jovyan/work \

-p 8888:8888 jupyter/all-spark-notebook

The parameters passed in have the following meanings;

- -d - run this container as a daemon (in the background)

- -p - map external port 8888 to port 8888 of the container

- –name - assign a name (spark-jupyter) to this container

- -v - mount volumes, in this case we are mounting the

/home/ec2-user/dev/data-sciencethat we cloned from our git repository to/home/jovyan/workwhere Jupyter expects to find it’s notebooks - jupyter/all-spark-notebook is the image that we are running

Note that since I gave the container a meaningful name, I can stop and start it again in the future simply by running the following commands;

docker stop spark-jupyter

docker start spark-jupyter

Securing the IDE

The Jupyter notebook is launched with a security feature that requires a secret token to grant access to the web based notebook.

This token is dumped to the console when the process starts in the Docker container, to get hold of it checking the container’s logs is required;

[ec2-user@ip-172-31-41-78 ~]$ docker logs spark-jupyter

Execute the command: jupyter notebook

[I 22:46:12.242 NotebookApp] Writing notebook server cookie secret to /home/jovyan/.local/share/jupyter/runtime/notebook_cookie_secret

[W 22:46:12.279 NotebookApp] WARNING: The notebook server is listening on all IP addresses and not using encryption. This is not recommended.

[I 22:46:12.321 NotebookApp] JupyterLab alpha preview extension loaded from /opt/conda/lib/python3.6/site-packages/jupyterlab

[I 22:46:12.328 NotebookApp] Serving notebooks from local directory: /home/jovyan

[I 22:46:12.328 NotebookApp] 0 active kernels

[I 22:46:12.328 NotebookApp] The Jupyter Notebook is running at:

[I 22:46:12.328 NotebookApp] http://[all ip addresses on your system]:8888/?token=XXXXXXXXXXXXXxxxxxxxxxxxxxxXXXXXXXXXXXXXXX

[I 22:46:12.328 NotebookApp] Use Control-C to stop this server and shut down all kernels (twice to skip confirmation).

[C 22:46:12.329 NotebookApp]

To access the web based IDE, I grabbed the public DNS of my EC2 instance from the AWS console and appended :8888/?token=XXXXXXXXXXXXXxxxxxxxxxxxxxxXXXXXXXXXXXXXXX, my URL looked something like this

http://ec2-00-000-00-000.eu-west-1.compute.amazonaws.com:8888/?token=XXXXXXXXXXXXXxxxxxxxxxxxxxxXXXXXXXXXXXXXXX

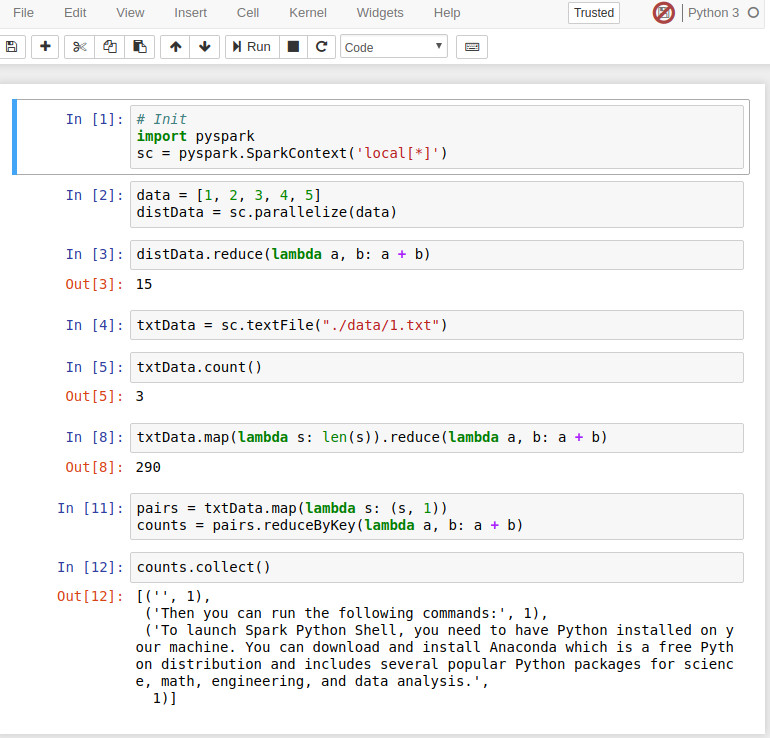

And I could run my pyspark scripts straight away