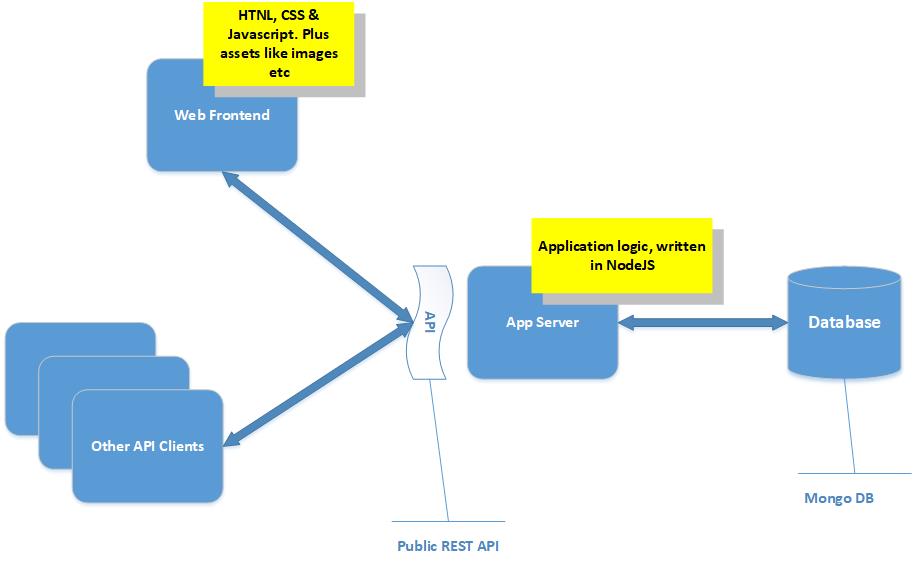

In this series of articles I will discuss how to build a simple web application on cloud native architectures. The application itself is made up of a database (I used MongoDB), a backend application written in NodeJS that contains the business logic and exposes a publicly accessible REST API, and a web front end. The REST API serves both the web front end and other third-party clients.

The drawing below illustrates the architecture.

Basic Architecture

Traditionally, the database would run on a server or a cluster, the back end would run as a NodeJS process on a bare-metal server or a virtual machine, and the front end would be a static website hosted on an NginX or Apache web server. Scaling would require either running multiple NodeJS processes or running the process on multiple servers, while the web front end would be scaled through Apache or NginX configuration.

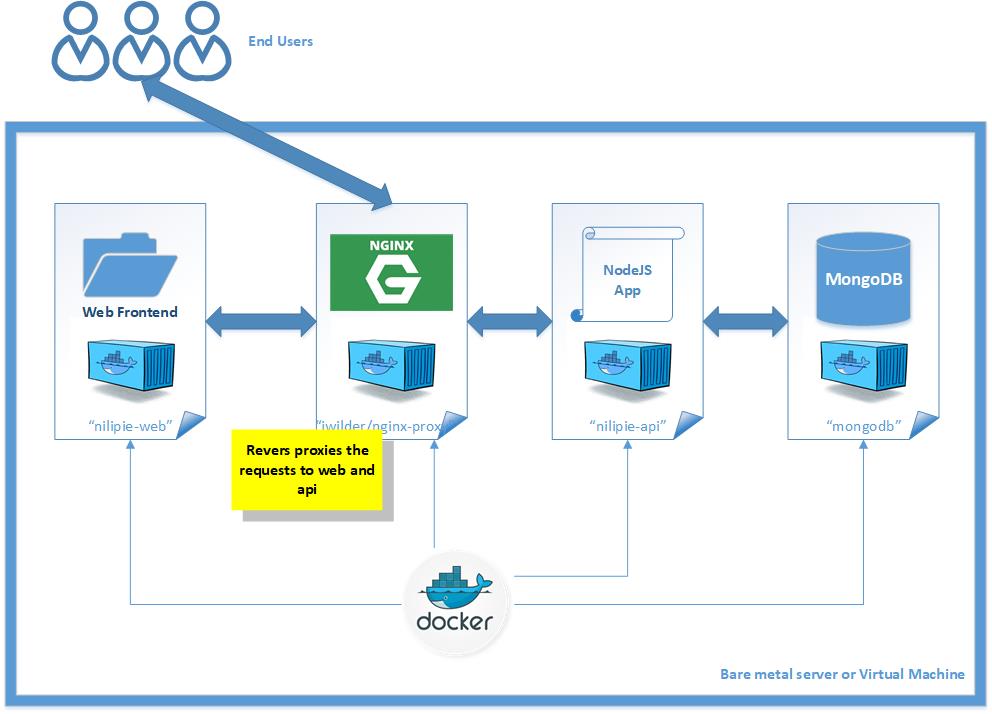

We are going to use Docker instead: the MongoDB database will run in a container, the backend will run as a NodeJS process in its own separate container, and a third container will serve the web front end. Since the backend and front-end containers each expose their own port, and we want both the API and the web front end to be reachable on port 80 of the same host, we need one more container that simply handles incoming requests and forwards them to the right container on the right port (a reverse proxy). The architecture looks like this:

Docker Based Architecture
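To make the reverse proxy's job concrete, here is a minimal sketch in JavaScript (the backend's language) of host-based routing: the proxy looks at the Host header of each request and picks the container to forward it to. The routing table, the upstream addresses and the `pickUpstream` function are hypothetical; the real proxy set up later in this article builds its table from the running containers rather than from a hard-coded object.

```javascript
// Hypothetical routing table: host name -> upstream container address.
// The container names and ports are assumptions for illustration only.
const routes = {
  "www.nilipie.com": "http://nilipie-web:80",
  "api.nilipie.com": "http://nilipie-api:80",
};

// Return the upstream for a request's Host header, or null if none matches.
// Assumes plain host names (IPv6 literals such as "[::1]:80" are not handled).
function pickUpstream(hostHeader, table) {
  if (typeof hostHeader !== "string" || hostHeader === "") return null;
  // Drop an optional ":port" suffix; host names are case-insensitive.
  const host = hostHeader.split(":")[0].trim().toLowerCase();
  return Object.prototype.hasOwnProperty.call(table, host) ? table[host] : null;
}
```

For example, `pickUpstream("api.nilipie.com", routes)` returns `"http://nilipie-api:80"`, while an unknown host returns `null`, which a real proxy would answer with some kind of error response instead of forwarding.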

Setting up the database (MongoDB)

To start a Docker container running a MongoDB instance, we simply run:

```shell
docker run --name mongodb -d mongo:latest
```

Of course, this is not suitable for production: it is a quick and easy way of getting a MongoDB instance without considering issues like reliability, redundancy or security. The container exposes MongoDB on the default port, 27017.

Setting up the backend app

The app runs as a NodeJS process:

```shell
node app.js
```

With this setting included in package.json,

```json
"scripts": {
  "start": "node app.js"
}
```

the app can be started with yarn using the following command:

```shell
yarn start
```

Therefore, this Dockerfile is all we need to build a Docker image for the app:

```dockerfile
FROM node:alpine
WORKDIR /app
ADD . /app
RUN yarn install
EXPOSE 80
CMD ["yarn", "start"]
```
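The article does not show app.js itself, so here is a hypothetical minimal stand-in that illustrates how the pieces fit together: `yarn start` runs `node app.js`, and the server must actually listen on the port the Dockerfile declares, since `EXPOSE 80` only documents the port. The `/health` route, the `route` and `startServer` functions and the `PORT` variable are assumptions for illustration, not part of the Nilipie API.

```javascript
const http = require("http");

// Hypothetical request router: returns a status code and a JSON-serialisable body.
function route(method, path) {
  if (method === "GET" && path === "/health") {
    return { status: 200, body: { status: "ok" } };
  }
  return { status: 404, body: { error: "not found" } };
}

// Start the HTTP server on PORT, defaulting to 80 to match EXPOSE 80.
function startServer(port = Number(process.env.PORT) || 80) {
  const server = http.createServer((req, res) => {
    const { status, body } = route(req.method, req.url);
    res.writeHead(status, { "Content-Type": "application/json" });
    res.end(JSON.stringify(body));
  });
  // No host argument: listen on all interfaces so the port is reachable from outside the container.
  server.listen(port, () => console.log(`API listening on port ${port}`));
  return server;
}
```

Calling `startServer()` at the end of such a file would listen on port 80 unless `PORT` is set.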

We build the Docker image from this Dockerfile, tagging (naming) it nilipie-api:

```
docker build -t nilipie-api .
Sending build context to Docker daemon 13.81MB
Step 1/6 : FROM node:alpine
---> 5ffbcf1d9932
Step 2/6 : WORKDIR /app
---> Using cache
---> 6e10b7f650f5
Step 3/6 : ADD . /app
---> Using cache
---> 446aba5d6d08
Step 4/6 : RUN yarn install
---> Using cache
---> fc342abfb2ba
Step 5/6 : EXPOSE 80
---> Using cache
---> 7fd8c579f8cf
Step 6/6 : CMD ["yarn", "start"]
---> Using cache
---> 8b73abf875f4
Successfully built 8b73abf875f4
Successfully tagged nilipie-api:latest
```

It is now available in our list of Docker images:

```
docker images
REPOSITORY    TAG      IMAGE ID       CREATED          SIZE
nilipie-api   latest   5d75f8edae35   34 seconds ago   109MB
```

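The NodeJS app uses this code to connect to MongoDB:

```javascript
var mongoose = require('mongoose');

// Use the Q library's promises for Mongoose's asynchronous operations
mongoose.Promise = require('q').Promise;

// Connect using the URL supplied in the MONGODB_URL environment variable.
// useMongoClient is needed in Mongoose 4.x and is no longer supported in Mongoose 5.
mongoose.connect(process.env.MONGODB_URL, {
  useMongoClient: true
});
```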

It expects an environment variable called MONGODB_URL containing the URL of a MongoDB instance. So we launch the backend app's container from the image we just created and give it the environment variable it needs:

```
docker run --link=mongodb:mongodb -e MONGODB_URL="mongodb://mongodb:27017/nilipie" -e VIRTUAL_HOST=api.nilipie.com --rm -d nilipie-api
b4b66e3adfd462039d6f24c8b491c5f9d77fb7dad2209210a50ff2858fbcb01d
```

We use --link to link the container to the MongoDB container (which we named mongodb); this is what makes the host name mongodb in MONGODB_URL resolve to that container. We also pass two environment variables: the required MONGODB_URL, and VIRTUAL_HOST, a parameter needed by the reverse proxy, which we'll discuss later on.
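Because the backend only works if MONGODB_URL is passed in, a small guard that checks the variable before connecting can turn a forgotten `-e` flag into a clear error message. This is a hypothetical helper (`getMongoUrl` is not part of the original app or of Mongoose), sketched under the assumption that the URL uses the `mongodb://` or `mongodb+srv://` scheme.

```javascript
// Hypothetical guard: return MONGODB_URL from the given environment object,
// or throw a descriptive error if it is missing or clearly not a MongoDB URL.
function getMongoUrl(env) {
  const url = env.MONGODB_URL;
  if (!url) {
    throw new Error("MONGODB_URL is not set; pass it with docker run -e MONGODB_URL=...");
  }
  // Only a cheap scheme check; the driver still validates the full URL.
  if (!/^mongodb(\+srv)?:\/\//.test(url)) {
    throw new Error(`MONGODB_URL must start with mongodb:// but was "${url}"`);
  }
  return url;
}
```

The connection code would then call `mongoose.connect(getMongoUrl(process.env), { useMongoClient: true })`, so a misconfigured container fails immediately with a readable message.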

Setting up the Web Frontend

So now we have the backend running and connected to the database. Next we set up the web front end. This is just a set of static files served by NginX, and the Dockerfile looks like this:

```dockerfile
FROM kyma/docker-nginx
ADD . /var/www
EXPOSE 80
CMD 'nginx'
```

We build a Docker image from it and tag it as nilipie-web:

```
docker build -t nilipie-web .
Sending build context to Docker daemon 3.736MB
Step 1/4 : FROM kyma/docker-nginx
---> eb883be3763d
Step 2/4 : ADD . /var/www
---> e630444e2c95
Step 3/4 : EXPOSE 80
---> Running in abc036877a61
Removing intermediate container abc036877a61
---> 3d71dc2435db
Step 4/4 : CMD 'nginx'
---> Running in 5628b1ee3138
Removing intermediate container 5628b1ee3138
---> 5d7d4584eb13
Successfully built 5d7d4584eb13
Successfully tagged nilipie-web:latest
```

And now the image is available:

```
docker images
REPOSITORY    TAG      IMAGE ID       CREATED             SIZE
nilipie-web   latest   5d7d4584eb13   6 seconds ago       186MB
nilipie-api   latest   5d75f8edae35   About an hour ago   109MB
```

We can run it with this command:

```shell
docker run -e VIRTUAL_HOST=www.nilipie.com --rm -d nilipie-web
```

The web front end, like other clients, interacts with the API through the URL https://api.nilipie.com. So now we need a reverse proxy that forwards requests for https://www.nilipie.com to the container running nilipie-web and requests for https://api.nilipie.com to the container running nilipie-api. To do this we use the jwilder/nginx-proxy Docker image:

```
docker run -d -p 80:80 -v /var/run/docker.sock:/tmp/docker.sock:ro jwilder/nginx-proxy
Unable to find image 'jwilder/nginx-proxy:latest' locally
latest: Pulling from jwilder/nginx-proxy
be8881be8156: Pull complete
b4babd36efe5: Pull complete
f4eba7658e18: Pull complete
fc141716ac64: Pull complete
87b964c68304: Pull complete
d07092114f4c: Pull complete
5092b1e0c1da: Pull complete
d90a3596290d: Pull complete
5ca9f664a671: Pull complete
eb9b93208683: Pull complete
Digest: sha256:e869d7aea7c5d4bae95c42267d22c913c46afd2dd8113ebe2a24423926ba1fff
Status: Downloaded newer image for jwilder/nginx-proxy:latest
4113a53ac527b89289f757c54e41620351a9b89f1f50843ec682586739cb8f54
```

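This container listens for all requests on port 80 and forwards each one to the correct Docker container, based on the VIRTUAL_HOST environment variable we set on our two containers. It can find those containers because the Docker socket is mounted into it (-v /var/run/docker.sock:/tmp/docker.sock:ro), which lets it watch Docker events and regenerate its NginX configuration as containers start and stop. So now we have the full architecture up and running, serving requests.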
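The proxy's behaviour can be approximated in a few lines. The sketch below is a simplified, hypothetical model, not nginx-proxy's actual code (which uses docker-gen templates): given each container's name and its environment entries in `KEY=VALUE` form, the way `docker inspect` reports them under `Config.Env`, it collects the VIRTUAL_HOST values into a host-to-container map, the kind of information the generated NginX configuration is built from. nginx-proxy also accepts several comma-separated host names in VIRTUAL_HOST, which the sketch mimics; `parseEnv` and `buildHostMap` are illustrative names.

```javascript
// Turn ["KEY=VALUE", ...] into { KEY: "VALUE" }, splitting at the first "=".
function parseEnv(entries) {
  const vars = {};
  for (const entry of entries) {
    const i = entry.indexOf("=");
    if (i > 0) vars[entry.slice(0, i)] = entry.slice(i + 1);
  }
  return vars;
}

// Map each host name to the names of the containers that declare it.
function buildHostMap(containers) {
  const map = new Map();
  for (const c of containers) {
    const vhost = parseEnv(c.env).VIRTUAL_HOST;
    if (!vhost) continue; // containers without VIRTUAL_HOST are not proxied
    const hosts = vhost.split(",").map((h) => h.trim()).filter(Boolean);
    for (const host of hosts) {
      if (!map.has(host)) map.set(host, []);
      map.get(host).push(c.name); // several containers may share one host name
    }
  }
  return map;
}
```

Fed the three containers from this article, the map would contain api.nilipie.com and www.nilipie.com, while mongodb, which has no VIRTUAL_HOST, is left out.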

Using Docker Compose

Instead of manually typing docker commands for each component of our solution, we can use Docker Compose to define the solution declaratively and let Docker Compose handle fetching and building images, running and linking containers, and so on.

The docker-compose.yml file below defines, in a single file, the solution we put together above:

```yaml
version: '3'

services:
  reverse-proxy:
    image: "jwilder/nginx-proxy"
    ports:
      - "80:80"
    volumes:
      - /var/run/docker.sock:/tmp/docker.sock:ro
    depends_on:
      - nilipie-web
      - nilipie-api

  nilipie-web:
    build: "./nilipie-web/"
    container_name: "nilipie-web"
    environment:
      - VIRTUAL_HOST=www.nilipie.com
    depends_on:
      - nilipie-api

  nilipie-api:
    build: "./nilipie-api/"
    container_name: "nilipie-api"
    links:
      - mongodb:mongodb
    environment:
      - MONGODB_URL=mongodb://mongodb:27017/nilipie
      - VIRTUAL_HOST=api.nilipie.com
    depends_on:
      - mongodb

  mongodb:
    image: "mongo:latest"
    container_name: "mongodb"
```

It is pretty much the same setup we created with the docker run commands, with the parameters we passed on the command line now defined in the YAML file. What is new in this file is depends_on, which defines dependencies between the containers and so controls the order in which they are started. Note that depends_on only waits for a dependency's container to start, not for the service inside it (MongoDB, for example) to be ready to accept connections.
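Because depends_on does not wait for MongoDB to accept connections, one common workaround is to retry in the application until the database port answers. Here is a sketch of such a retry loop with exponential backoff, using Node's built-in `net` module; `waitForPort`, `tryConnect` and `backoffDelays` are hypothetical helpers, and an open TCP port is only an approximation of "ready".

```javascript
const net = require("net");

// Delays in ms between attempts: baseMs, 2*baseMs, 4*baseMs, ..., capped at maxMs.
function backoffDelays(retries, baseMs, maxMs) {
  const delays = [];
  for (let i = 0; i < retries; i++) {
    delays.push(Math.min(baseMs * 2 ** i, maxMs));
  }
  return delays;
}

// One TCP connection attempt: resolves on success, rejects on error or timeout.
function tryConnect(host, port, timeoutMs) {
  return new Promise((resolve, reject) => {
    const socket = net.connect({ host, port });
    socket.setTimeout(timeoutMs);
    socket.once("connect", () => {
      socket.destroy(); // we only needed to know that the port is open
      resolve();
    });
    socket.once("timeout", () => {
      socket.destroy();
      reject(new Error(`timed out after ${timeoutMs} ms`));
    });
    socket.once("error", (err) => {
      socket.destroy();
      reject(err);
    });
  });
}

// Try immediately, then retry after each backoff delay; throw if every attempt fails.
async function waitForPort(host, port, { retries = 5, baseMs = 500, maxMs = 5000, timeoutMs = 2000 } = {}) {
  let lastError;
  for (const delay of [0, ...backoffDelays(retries, baseMs, maxMs)]) {
    if (delay > 0) await new Promise((r) => setTimeout(r, delay));
    try {
      await tryConnect(host, port, timeoutMs);
      return;
    } catch (err) {
      lastError = err;
    }
  }
  throw new Error(`${host}:${port} is not reachable: ${lastError.message}`);
}
```

The backend could then run `waitForPort("mongodb", 27017).then(() => mongoose.connect(...))`, so it tolerates MongoDB becoming ready a little after its container starts.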

Once this file is in place, docker-compose up starts the whole solution:

```shell
docker-compose up
```

Pulling mongodb (mongo:latest)...

latest: Pulling from library/mongo

3b37166ec614: Pull complete

504facff238f: Pull complete

ebbcacd28e10: Pull complete

c7fb3351ecad: Pull complete

2e3debadcbf7: Pull complete

004c7a04feb1: Pull complete

897284d7f640: Pull complete

af4d2dae1422: Pull complete

5e988d91970a: Pull complete

aebe46e3fb07: Pull complete

6e52ad506433: Pull complete

47d2bdbad490: Pull complete

0b15ac2388a7: Pull complete

7b8821d8bba9: Pull complete

Digest: sha256:4ad50a4f3834a4abc47180eb0c5393f09971a935ac3949920545668dd4253396

Status: Downloaded newer image for mongo:latest

Building nilipie-api

Step 1/6 : FROM node:alpine

---> 5ffbcf1d9932

Step 2/6 : WORKDIR /app

---> Running in b2206e737514

Removing intermediate container b2206e737514

---> a0ea9ddfb970

Step 3/6 : ADD . /app

---> 6887de9181ac

Step 4/6 : RUN yarn install

---> Running in eec69a914fb7

yarn install v1.9.4

[1/4] Resolving packages...

[2/4] Fetching packages...

[3/4] Linking dependencies...

[4/4] Building fresh packages...

Done in 5.97s.

Removing intermediate container eec69a914fb7

---> 301f06f88fc0

Step 5/6 : EXPOSE 80

---> Running in b99f7ead1685

Removing intermediate container b99f7ead1685

---> 69fbda258f68

Step 6/6 : CMD ["yarn", "start"]

---> Running in 0609ba2f8d77

Removing intermediate container 0609ba2f8d77

---> 715b4163393c

Successfully built 715b4163393c

Successfully tagged git_nilipie-api:latest

WARNING: Image for service nilipie-api was built because it did not already exist. To rebuild this image you must use `docker-compose build` or `docker-compose up --build`.

Building nilipie-web

Step 1/4 : FROM kyma/docker-nginx

---> eb883be3763d

Step 2/4 : ADD . /var/www

---> ce5452932173

Step 3/4 : EXPOSE 80

---> Running in 67590d4feafb

Removing intermediate container 67590d4feafb

---> d9ca6919ef22

Step 4/4 : CMD 'nginx'

---> Running in b108e6f60d1e

Removing intermediate container b108e6f60d1e

---> 62c69deb61d6

Successfully built 62c69deb61d6

Successfully tagged git_nilipie-web:latest

WARNING: Image for service nilipie-web was built because it did not already exist. To rebuild this image you must use `docker-compose build` or `docker-compose up --build`.

Pulling reverse-proxy (jwilder/nginx-proxy:)...

latest: Pulling from jwilder/nginx-proxy

be8881be8156: Pull complete

b4babd36efe5: Pull complete

f4eba7658e18: Pull complete

fc141716ac64: Pull complete

87b964c68304: Pull complete

d07092114f4c: Pull complete

5092b1e0c1da: Pull complete

d90a3596290d: Pull complete

5ca9f664a671: Pull complete

eb9b93208683: Pull complete

Digest: sha256:e869d7aea7c5d4bae95c42267d22c913c46afd2dd8113ebe2a24423926ba1fff

Status: Downloaded newer image for jwilder/nginx-proxy:latest

Creating mongodb ... done

Creating nilipie-api ... done

Creating nilipie-web ... done

Creating git_reverse-proxy_1 ... done

Attaching to mongodb, nilipie-api, nilipie-web, git_reverse-proxy_1

mongodb | 2018-10-12T13:56:25.584+0000 I CONTROL [main] Automatically disabling TLS 1.0, to force-enable TLS 1.0 specify --sslDisabledProtocols 'none'

mongodb | 2018-10-12T13:56:25.593+0000 I CONTROL [initandlisten] MongoDB starting : pid=1 port=27017 dbpath=/data/db 64-bit host=0652126ade6a

mongodb | 2018-10-12T13:56:25.593+0000 I CONTROL [initandlisten] db version v4.0.3

mongodb | 2018-10-12T13:56:25.593+0000 I CONTROL [initandlisten] git version: 7ea530946fa7880364d88c8d8b6026bbc9ffa48c

mongodb | 2018-10-12T13:56:25.593+0000 I CONTROL [initandlisten] OpenSSL version: OpenSSL 1.0.2g 1 Mar 2016

mongodb | 2018-10-12T13:56:25.593+0000 I CONTROL [initandlisten] allocator: tcmalloc

mongodb | 2018-10-12T13:56:25.593+0000 I CONTROL [initandlisten] modules: none

mongodb | 2018-10-12T13:56:25.593+0000 I CONTROL [initandlisten] build environment:

mongodb | 2018-10-12T13:56:25.593+0000 I CONTROL [initandlisten] distmod: ubuntu1604

mongodb | 2018-10-12T13:56:25.593+0000 I CONTROL [initandlisten] distarch: x86_64

mongodb | 2018-10-12T13:56:25.593+0000 I CONTROL [initandlisten] target_arch: x86_64

mongodb | 2018-10-12T13:56:25.594+0000 I CONTROL [initandlisten] options: { net: { bindIpAll: true } }

mongodb | 2018-10-12T13:56:25.594+0000 I STORAGE [initandlisten]

mongodb | 2018-10-12T13:56:25.594+0000 I STORAGE [initandlisten] ** WARNING: Using the XFS filesystem is strongly recommended with the WiredTiger storage engine

mongodb | 2018-10-12T13:56:25.594+0000 I STORAGE [initandlisten] ** See http://dochub.mongodb.org/core/prodnotes-filesystem

mongodb | 2018-10-12T13:56:25.594+0000 I STORAGE [initandlisten] wiredtiger_open config: create,cache_size=4467M,session_max=20000,eviction=(threads_min=4,threads_max=4),config_base=false,statistics=(fast),log=(enabled=true,archive=true,path=journal,compressor=snappy),file_manager=(close_idle_time=100000),statistics_log=(wait=0),verbose=(recovery_progress),

mongodb | 2018-10-12T13:56:26.408+0000 I STORAGE [initandlisten] WiredTiger message [1539352586:408380][1:0x7f55577e2a00], txn-recover: Set global recovery timestamp: 0

mongodb | 2018-10-12T13:56:26.421+0000 I RECOVERY [initandlisten] WiredTiger recoveryTimestamp. Ts: Timestamp(0, 0)

mongodb | 2018-10-12T13:56:26.438+0000 I CONTROL [initandlisten]

mongodb | 2018-10-12T13:56:26.438+0000 I CONTROL [initandlisten] ** WARNING: Access control is not enabled for the database.

mongodb | 2018-10-12T13:56:26.438+0000 I CONTROL [initandlisten] ** Read and write access to data and configuration is unrestricted.

mongodb | 2018-10-12T13:56:26.438+0000 I CONTROL [initandlisten]

mongodb | 2018-10-12T13:56:26.438+0000 W CONTROL [initandlisten]

mongodb | 2018-10-12T13:56:26.438+0000 W CONTROL [initandlisten]

mongodb | 2018-10-12T13:56:26.438+0000 I CONTROL [initandlisten]

mongodb | 2018-10-12T13:56:26.438+0000 I STORAGE [initandlisten] createCollection: admin.system.version with provided UUID: b017f0f6-f3f9-4f0d-9815-4bf7f2b2fac6

mongodb | 2018-10-12T13:56:26.599+0000 I COMMAND [initandlisten] setting featureCompatibilityVersion to 4.0

mongodb | 2018-10-12T13:56:26.606+0000 I STORAGE [initandlisten] createCollection: local.startup_log with generated UUID: af2233c7-6313-4c2c-a3c6-eb7faf323a61

mongodb | 2018-10-12T13:56:26.645+0000 I FTDC [initandlisten] Initializing full-time diagnostic data capture with directory '/data/db/diagnostic.data'

nilipie-api | yarn run v1.9.4

mongodb | 2018-10-12T13:56:26.649+0000 I NETWORK [initandlisten] waiting for connections on port 27017

mongodb | 2018-10-12T13:56:26.649+0000 I STORAGE [LogicalSessionCacheRefresh] createCollection: config.system.sessions with generated UUID: bda8656e-4ea1-4df6-ba39-13a30467210d

nilipie-api | $ node app.js

mongodb | 2018-10-12T13:56:26.692+0000 I INDEX [LogicalSessionCacheRefresh] build index on: config.system.sessions properties: { v: 2, key: { lastUse: 1 }, name: "lsidTTLIndex", ns: "config.system.sessions", expireAfterSeconds: 1800 }

mongodb | 2018-10-12T13:56:26.692+0000 I INDEX [LogicalSessionCacheRefresh] building index using bulk method; build may temporarily use up to 500 megabytes of RAM

nilipie-api | DB connected succesfully

nilipie-api | Nilipie API listening at http://:::80

reverse-proxy_1 | WARNING: /etc/nginx/dhparam/dhparam.pem was not found. A pre-generated dhparam.pem will be used for now while a new one

reverse-proxy_1 | is being generated in the background. Once the new dhparam.pem is in place, nginx will be reloaded.

mongodb | 2018-10-12T13:56:26.693+0000 I INDEX [LogicalSessionCacheRefresh] build index done. scanned 0 total records. 0 secs

reverse-proxy_1 | forego | starting dockergen.1 on port 5000

mongodb | 2018-10-12T13:56:27.908+0000 I NETWORK [listener] connection accepted from 172.18.0.3:56082 #1 (1 connection now open)

reverse-proxy_1 | forego | starting nginx.1 on port 5100

mongodb | 2018-10-12T13:56:27.926+0000 I NETWORK [conn1] received client metadata from 172.18.0.3:56082 conn1: { driver: { name: "nodejs", version: "2.2.27" }, os: { type: "Linux", name: "linux", architecture: "x64", version: "4.9.93-linuxkit-aufs" }, platform: "Node.js v10.10.0, LE, mongodb-core: 2.1.11" }

reverse-proxy_1 | dockergen.1 | 2018/10/12 13:56:28 Generated '/etc/nginx/conf.d/default.conf' from 26 containers

reverse-proxy_1 | dockergen.1 | 2018/10/12 13:56:28 Running 'nginx -s reload'

reverse-proxy_1 | dockergen.1 | 2018/10/12 13:56:28 Watching docker events

reverse-proxy_1 | dockergen.1 | 2018/10/12 13:56:28 Contents of /etc/nginx/conf.d/default.conf did not change. Skipping notification 'nginx -s reload'

reverse-proxy_1 | 2018/10/12 13:56:29 [notice] 52#52: signal process started

reverse-proxy_1 | Generating DH parameters, 2048 bit long safe prime, generator 2

reverse-proxy_1 | This is going to take a long time

reverse-proxy_1 | dhparam generation complete, reloading nginx

As you can see from the verbose output above, Docker Compose did all the work required to get the solution running as declared in the definition file. Once the process completed, we had all four containers running, just as before:

```
docker ps
CONTAINER ID   IMAGE                 COMMAND                  CREATED         STATUS         PORTS                NAMES
de095a776d58   jwilder/nginx-proxy   "/app/docker-entrypo…"   3 minutes ago   Up 3 minutes   0.0.0.0:80->80/tcp   git_reverse-proxy_1
193fe8d75817   git_nilipie-web       "/bin/sh -c 'nginx'"     3 minutes ago   Up 3 minutes   80/tcp, 443/tcp      nilipie-web
0c14ba8150dc   git_nilipie-api       "yarn start"             3 minutes ago   Up 3 minutes   80/tcp               nilipie-api
0652126ade6a   mongo:latest          "docker-entrypoint.s…"   3 minutes ago   Up 3 minutes   27017/tcp            mongodb
```

So we now have a relatively good starting point for a cloud native architecture for our web app. However, while this works fine as a setup for a development machine or perhaps a single VM, there is still a lot to improve before the architecture scales well in a cloud environment. So far we have built the Docker images from source code; ideally we should have a repository of images that any machine about to run the containers can pull from. That is what we will look at in the next part of this series.
